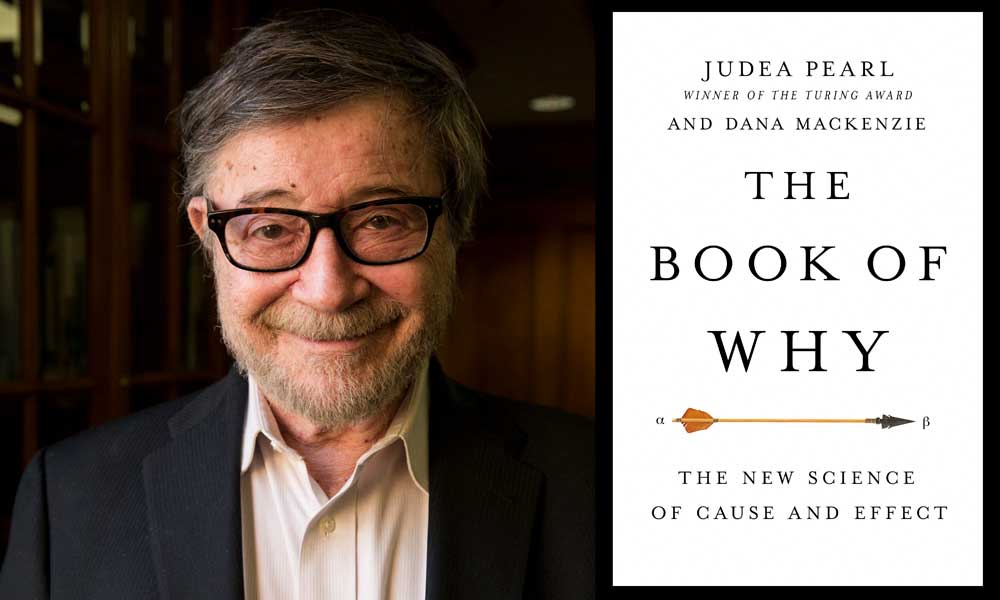

Does the rooster’s crow cause the sunrise? The answer seems obvious, if you’re a human—but a machine can only understand that the rooster’s crow and the sunrise are related, not which causes the other. This problem is at the heart of The Book of Why, written by Israeli-born UCLA professor Judea Pearl, 82, and science writer Dana Mackenzie. Pearl is a renowned computer scientist and winner of the Turing Award, the highest honor in computer science, and his new book explores a revolutionary new way for scientists to explain cause and effect—and how computers should understand it. Pearl speaks with Moment about the importance of cause-and-effect relationships, why science has neglected them for so long and how they shape everything from elections to Jewish ethics.

Why are cause-and-effect relationships so critical—and so misunderstood?

They are misunderstood because science was not kind to us; it has not given us a language to deal with them. Take the connection between the barometer reading and atmospheric pressure. We know that atmospheric pressure affects the barometer reading, not the other way around. If I fiddle with a barometer, the weather wouldn’t care a bit. Yet, as strange as it sounds, we cannot express this obvious fact mathematically. If you try to put it into an equation, the equation will deceive you. Equations just tell you that if you know some quantities, then the next one is determined—but you don’t know which affects which, because the equations of physics are symmetric.

We’ve developed a language that allows computers to reason like us. This will allow computers to assist those who are constantly on the lookout for cause and effect, such as researchers in the healthcare sciences, as well as in the social sciences. They care about improving drugs, they care about whether a drug is going to prevent a disease or harm your liver. They are concerned with narrowing economic inequality and slowing global warming. But until very recently, they didn’t have a language in which to express their scientific knowledge and translate it into causal questions and conclusions. Once you are able to express your scientific knowledge mathematically, you can combine it with data and come up with conclusions that answer your questions.
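The barometer example can be made concrete with a small simulation. The sketch below is illustrative only and is not taken from the book: the function names, the pressure values and the noise levels are all assumptions. It builds a toy world in which pressure drives the barometer reading, then compares what passive observation shows with what happens when one variable or the other is forcibly set.

```python
import random
import statistics


def correlation(xs, ys):
    """Pearson correlation of two equal-length lists."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate(n, rng, set_barometer=None, set_pressure=None):
    """Draw n (pressure, reading) pairs from a toy model (assumed numbers).

    Pressure is generated first and the reading follows it. Passing
    set_barometer or set_pressure overrides that variable by force,
    which stands in for an intervention.
    """
    pressures, readings = [], []
    for _ in range(n):
        if set_pressure is None:
            pressure = rng.gauss(1013.0, 10.0)  # hPa, hypothetical weather
        else:
            pressure = set_pressure
        if set_barometer is None:
            reading = pressure + rng.gauss(0.0, 1.0)  # instrument noise
        else:
            reading = set_barometer  # someone fiddles with the needle
        pressures.append(pressure)
        readings.append(reading)
    return pressures, readings


rng = random.Random(0)

# Passive observation: the two quantities move together, and the
# correlation alone says nothing about which one drives the other.
obs_pressure, obs_reading = simulate(5000, rng)
obs_corr = correlation(obs_pressure, obs_reading)

# Intervention 1: force the barometer to 1050. The weather does not care.
fiddled_pressure, _ = simulate(5000, rng, set_barometer=1050.0)
mean_pressure_after_fiddling = statistics.fmean(fiddled_pressure)

# Intervention 2: force the pressure to 1050. The barometer follows.
_, followed_reading = simulate(5000, rng, set_pressure=1050.0)
mean_reading_after_pressure_change = statistics.fmean(followed_reading)

print("observed correlation:", round(obs_corr, 3))
print("mean pressure after fiddling with barometer:", round(mean_pressure_after_fiddling, 1))
print("mean reading after changing pressure:", round(mean_reading_after_pressure_change, 1))
```

The asymmetry lives in the order of assignment inside `simulate`, not in the correlation, which is the same whichever variable is treated as the cause.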

What does “causal inference” mean, and how can we use it to make decisions?

It is the GPS in our brain that makes us pause before making a decision and asks: Do you really want to do it? What about the alternatives? Most decisions are made at an intuitive level. For instance, when we go to work, we face the same decision every day: Should I take this or that route? We make such decisions on the basis of past experience. However, if someone told you there is construction on Washington Avenue, you pause and think. To download the new information into your thinking mind, you must invoke a model of city streets. You say, “What options do I have to go around it?” Based on what your mental model tells you, you predict the travel time of various routes, and then off you go. This process is called causal inference or causal reasoning, because it involves envisioning the effects of your actions.

Why does understanding causal inference matter for non-scientists?

Most people are curious about the workings of their own mind, especially when you’re talking about a piece of mental machinery that they didn’t know existed: the machinery responsible for understanding cause-and-effect relationships.

People are also being bombarded today with all kinds of new information: new drugs, coffee is good for you, red meat is bad for you and so on. And they ought to know how these causal claims came about and be critical about who studied them, under what conditions, and whether those studies bear on you as an individual.

In addition, now that we can emulate cause-effect processes on a computer, we’re going to learn so much more about ourselves. For instance, we’re going to learn about free will: What is it? Is it an illusion, a sensation or a gift from God? When we equip a computer with new capabilities—say, free will—we’re going to learn about how the mind enables those capabilities.

How does Judaism approach causal relationships?

Look at Adam and Eve. When Adam and Eve ate from the tree of knowledge, the first thing they found out was that they were naked, but the very next thing they learned was to blame each other. And blaming is the juice of causal thinking. “Did you eat from the tree?” God asks. Adam says, “She enticed me to eat.” And Eve says, “Me? It was the serpent.”

Notice that God asks for facts, and Adam and Eve give him explanations. They just ate from the tree of knowledge, and what have they learned? Not to recount facts, but to understand the ropes between the facts. Adam could have said, “Yes, I ate” or, “No, I didn’t eat.” But he understood that God was really asking for a deeper answer: Why did you eat?

Jewish ethics is built on stopping the blame game and assigning personal responsibility for one’s actions. This clashes, of course, with the idea of an omniscient God. God can predict your actions, and you still have freedom to choose. This is an old philosophical problem that Judaism solves, as always, by repeating the question. We don’t know how it works, but we are taught to believe that we have control over our actions and that God, despite the contradiction, can predict our actions.

Today, God is replaced by the laws of physics, and we are facing much more concrete questions. We are close to solving the dilemma by opening people’s brains and finding out where the illusion of free will resides. We are also close to equipping computers with free will, taking it apart and seeing how it ticks. Other interesting questions are: Why should we do it? What are we going to gain? Would robots be smarter, more communicable, if they had free will? Would they enrich our lives? I don’t know if we should ask the rabbis to design a robot, but perhaps they can advise us how to prevent robots from discovering that, at least in their case, free will is just an illusion.

Could robots be Jewish—or practice any religion? Should they be equipped with religious sentiment?

That’s a very interesting question. Suppose it is true that religion benefits social behavior—though many argue that there’s more harm than good done in the name of religion. But suppose we come to the conclusion that religion is beneficial in terms of regulating human behavior; should we equip robots with such a component? I don’t see why not. Let’s give robots the illusion that there is a robot God, and they are going to be punished if they act improperly, and they should be nice to each other because other robots have feelings, too. All the emotional charges that we associate with religion and morality could be programmed in that way on a machine.

However, we should not forget that Jewishness is more than just a religion. It is a peoplehood and a history perhaps more than it is a religion. So your question “Could robots be Jewish?” translates immediately to the question “Could a community of robots attain a sense of collective identity forged by common history?” It would take unusually creative programmers to choreograph scripts like Exodus or Masada and implant in the robot’s software the unmistaken belief that he/she was part of that historical journey. Common narratives are the strongest bonds in human society, so if that would make a team of robots play a better game of soccer, I am all for it.

Okay, you just convinced me. The next generation of robots is going to lay tefillin and sing “Hatikva.” Note, however, that the moment we ask, “Can a robot be X?”—we find ourselves viewing X from a fresh new perspective, and we end up gaining a deeper understanding of what the essence of X is.

How can programming computers to understand causal inference change our lives?

Part of the answer is happening already, though not in the public eye, and part will take place in the near future, as a very visible part of our lives. In the first part I am including computer-assisted decision making, which has already significantly influenced the way drugs are being improved and the way social policies are being evaluated and enacted. The ability to access a huge amount of data, from diverse sources, and use it to answer causal questions such as “Should we operate on this patient?” brings personalized medicine closer to reality.

The second part is more interesting, because it will impact each and every one of us directly. The increased role of computer-assisted decision making has led several European countries to legislate a policy called the General Data Protection Regulation. It gives every individual three rights: the right to know whether an automatic program participated in an important decision in one’s life (say, an employment or loan application), the right to challenge such a decision and the right to obtain an explanation as to how and why the decision was made. The last is what interests us here because “explanation” is a causal notion (remember Adam and Eve) that machines are unable to produce unless they understand cause and effect. Machines that understand cause and effect imply machines that produce plausible explanations and machines that communicate as trusted partners with humans.

We’ve been using computers to make political predictions for years—so what will programming computers to understand causal inference change?

Your question hits precisely at the key difference between statistics and causation. The former predicts the future from passive observations, while the latter predicts the results of actions, interventions or policies. To predict the results of an election based on survey data is a statistical task, well studied and well ensconced in the old science. But to predict the results of raising the minimum wage is a causal task, for it involves active intervention, hence it cannot be accomplished using traditional methods; it requires the guidance of the new science of cause and effect.

Another class of tasks involves counterfactuals: for example, predicting what the world would be like had we acted differently. A practical example in a political setting would be to identify swing voters—that is, voters who would change their vote if and only if contacted (say by campaign workers). Although data never tell us how a given voter would behave under different conditions, counterfactual-understanding computers can nevertheless identify, from data, which voter is likely to be in the “swing voters” class.
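One simple way to see how data can say something about swing voters is a simulated contact experiment. The sketch below is a toy illustration under loudly stated assumptions, not the method of the book: the group names and shares are invented, contact is assigned by coin flip, and no voter is put off by being contacted (a monotonicity assumption). Under those assumptions, the gap between the vote rate of contacted and uncontacted voters in a group equals the share of swing voters in that group, even though no individual voter is ever observed under both conditions.

```python
import random

# Hypothetical shares of each response type per group. In a real study
# these are hidden; only (group, contacted, voted) would be recorded.
TRUE_SHARES = {
    "young": {"always": 0.30, "never": 0.30, "swing": 0.40},
    "older": {"always": 0.60, "never": 0.30, "swing": 0.10},
}


def votes(voter_type, contacted):
    """Return whether a voter of the given type votes, given contact."""
    if voter_type == "always":
        return True
    if voter_type == "never":
        return False
    return contacted  # a swing voter votes if and only if contacted


def run_experiment(n_per_group, rng):
    """Simulate a randomized contact experiment (assumed 50/50 assignment)."""
    records = []
    for group, shares in TRUE_SHARES.items():
        types = list(shares)
        weights = list(shares.values())
        for _ in range(n_per_group):
            voter_type = rng.choices(types, weights)[0]
            contacted = rng.random() < 0.5
            records.append((group, contacted, votes(voter_type, contacted)))
    return records


def estimated_swing_share(records, group):
    """Vote rate among the contacted minus vote rate among the uncontacted."""
    def vote_rate(contacted):
        outcomes = [v for g, c, v in records if g == group and c == contacted]
        return sum(outcomes) / len(outcomes)
    return vote_rate(True) - vote_rate(False)


rng = random.Random(1)
records = run_experiment(20000, rng)
young_estimate = estimated_swing_share(records, "young")
older_estimate = estimated_swing_share(records, "older")

print("estimated swing share, young:", round(young_estimate, 2))
print("estimated swing share, older:", round(older_estimate, 2))
```

The estimate never labels a particular voter as a swing voter; it only says in which group such voters are more common, which is the sense of "likely to be in the class". Without the monotonicity assumption, the same difference would give only a lower bound on the swing share.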

Has being Jewish led to your interest in cause and effect?

Yes, in addition to ingrained Jewish reverence for learning and innovation (chidushim), my generation was also blessed with an extra pinch of chutzpah—a necessary ingredient for shaking traditional thinking about cause and effect. My Jewish heritage helped me learn to question authority, and my Israeli upbringing made questioning authority a national anthem. I grew up in a society that had to impose its own authority on itself. We didn’t have experts or dogma. We left religion, and we had to choreograph a functioning society based on self-made values. We didn’t believe our rabbis. We definitely didn’t believe the British government. We didn’t believe the United Nations. So we had to question authority. That is one of the reasons that I was able to look at science and question the disparity between what people do and what they ought to do and ask, “How come it wasn’t done?”
